To me, Jeff Hawkins is a polymath. He was so frustrated that AI research wasn't grounded in how brains actually work that he spent decades studying neuroscience while building his tech companies. That journey culminated in this book, written with Sandra Blakeslee, which sets out to define what intelligence is so that we can better mimic it (if ever).

Unsurprisingly, the book argues that despite massive computing power, machines lack true intelligence because we've been building them wrong. We first need to understand and replicate the brain's actual algorithms.

My Notes

Why Machines Aren't Intelligent (Yet)

Hawkins says intelligence is not about behavior. It's about memory-prediction.

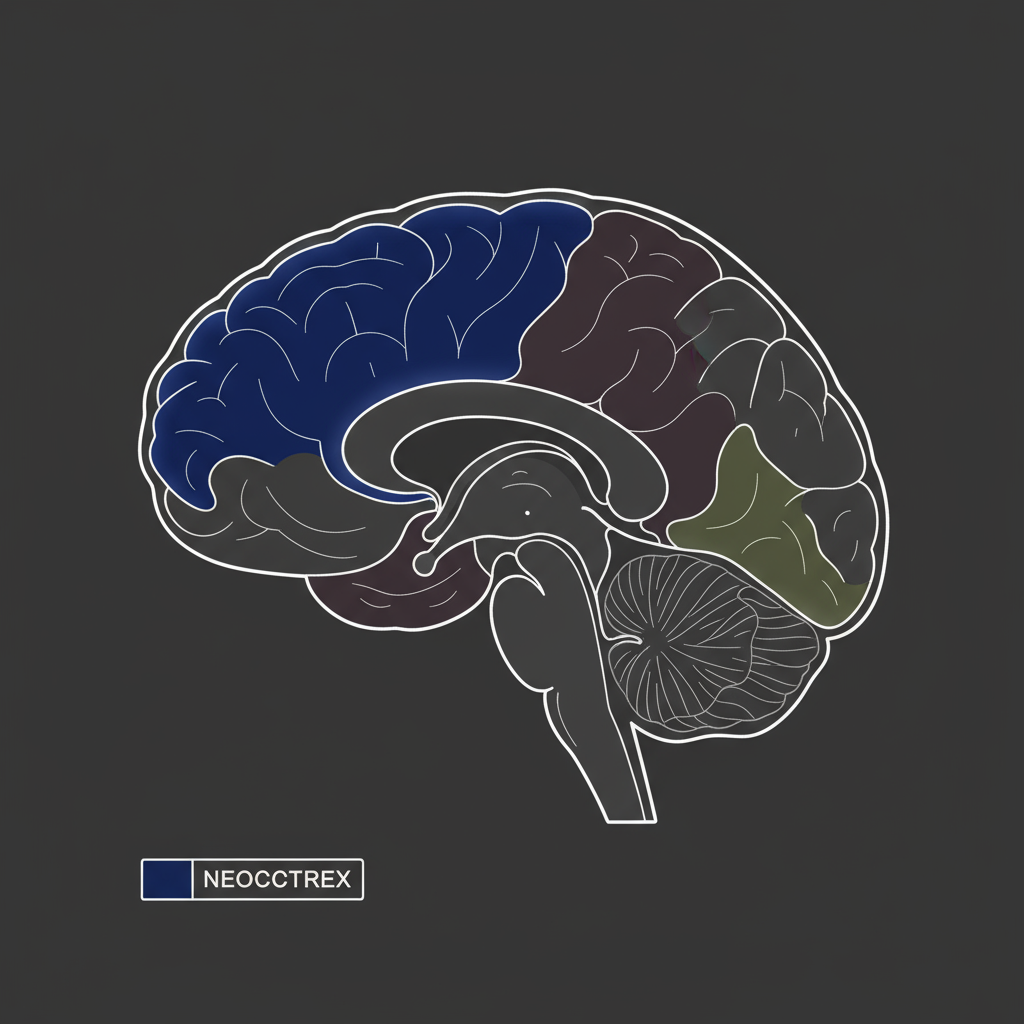

And he pulls from the neocortex to back this up. The neocortex is a human memory system that stores sequences of patterns hierarchically. It constantly predicts what happens next. And behavior is just the byproduct of this pattern-prediction.

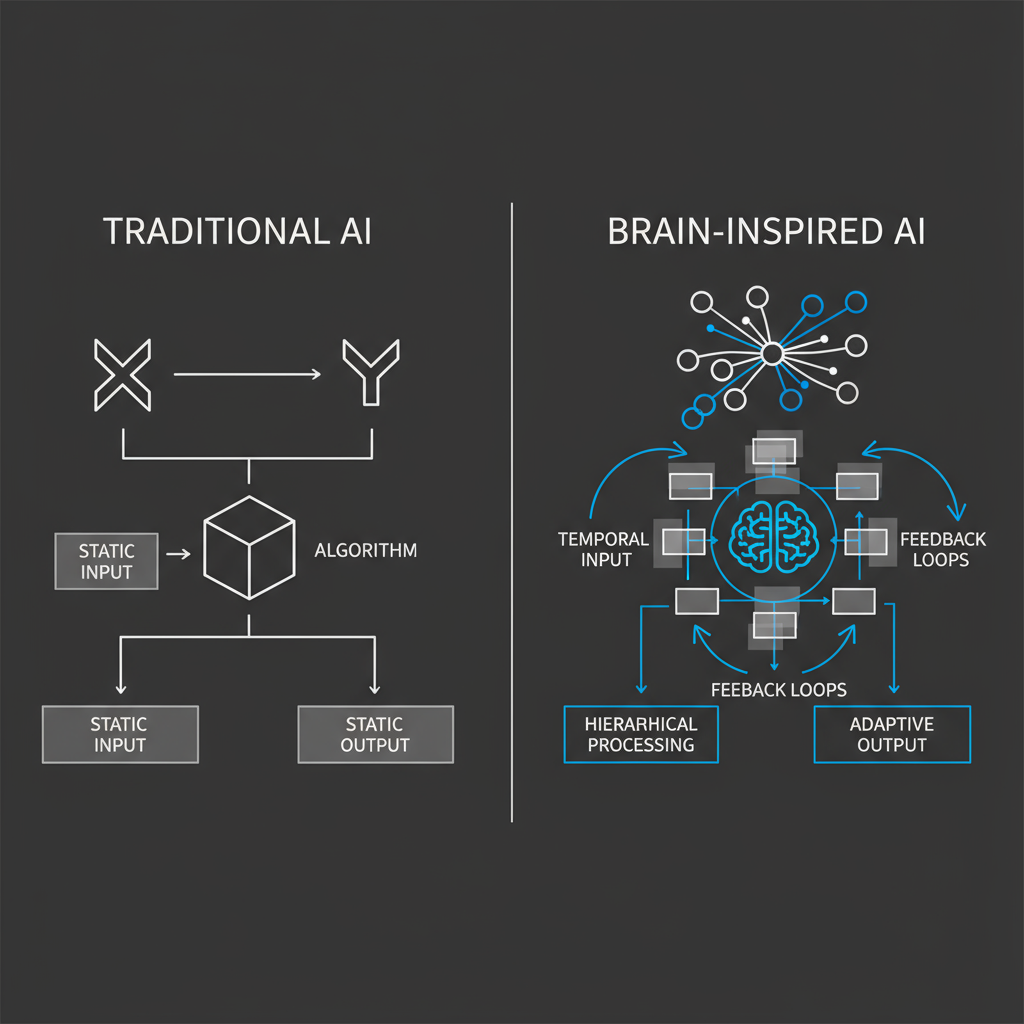

This is in stark contrast with the traditional views on how AI should work, which consider behavior and problem-solving as the core of intelligence. Early brain models (the McCulloch-Pitts neuron included) assumed the brain was a logic circuit: inputs turn into outputs. Expert systems and neural networks followed a similar basic architecture. But Hawkins calls intelligence temporal, predictive, and hierarchical. It is more than just a bunch of "trues" and "falses" strung together.

The Neocortical Algorithm

The neocortex uses the same basic structure everywhere. The regions for vision, hearing, and even touch look remarkably similar under a microscope. Same structure, same algorithm. From this premise, Hawkins concludes that the difference is not in the processor; it is in the input.

Neuroplasticity research bolsters this argument. In Dr. Mriganka Sur's experiment in 2000, scientists rewired visual signals into the auditory regions of ferrets' brains, and the auditory cortex learned to process sight. Blind people, too, use their visual cortex to read Braille. The main argument is straightforward: the brain runs the same program everywhere, just on different inputs such as vision, sound, and touch.

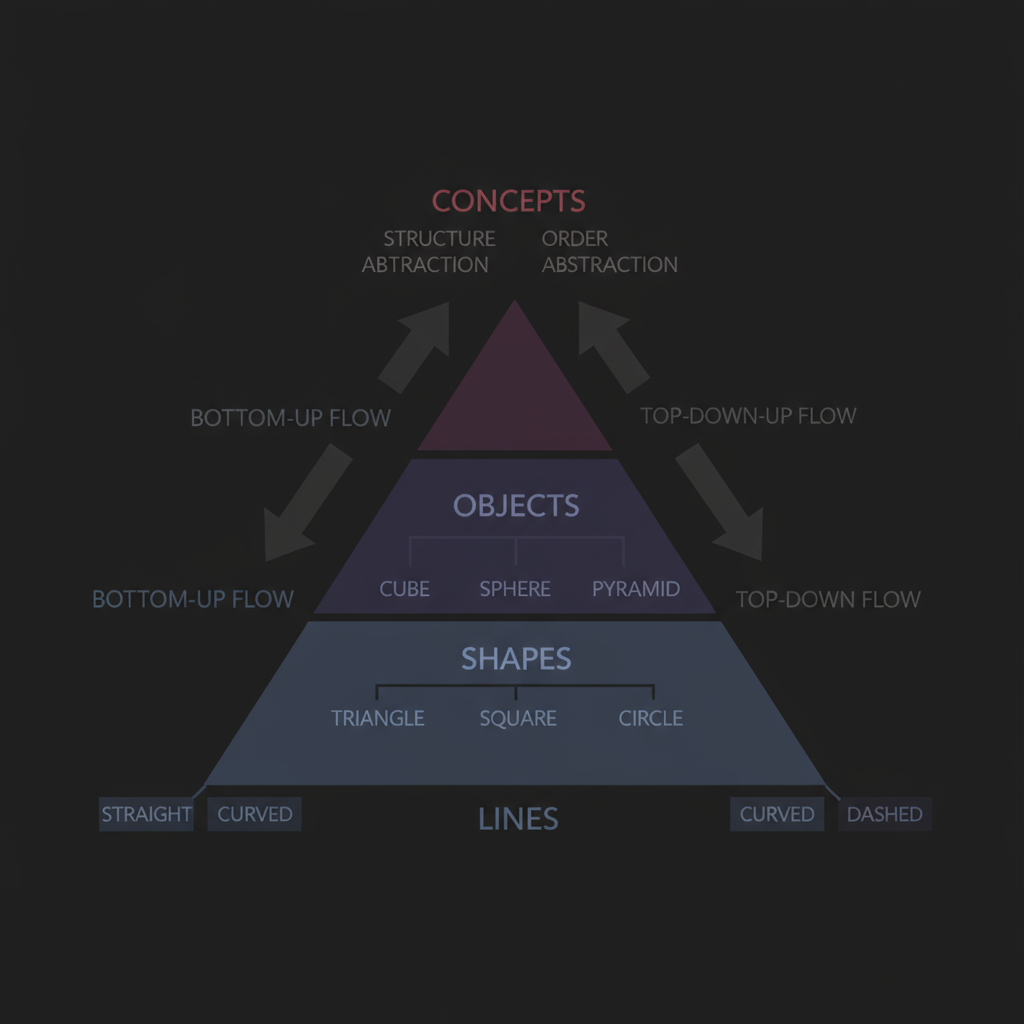

And that algorithm is hierarchical. Try to imagine building blocks stacked on top of each other:

Bottom: Senses pick up pieces. A line. A sound. A touch.

Middle: Pieces combine. Lines become shapes. Sounds become notes. Touches become textures.

Higher: Combinations form complete things. Shapes become objects. Notes become words. Textures become fabric.

Top: Your brain connects ideas. Understands relationships. Thinks abstractly.

Each layer learns patterns and predicts what's next. Bottom predicts simple things. And top predicts complex ideas.

Information flows both ways, not a one-way line from your eyes to your thoughts. Two things happen simultaneously:

Bottom-up (from senses to brain): Your eyes see shapes, colors, lines. That raw data travels up to your brain.

Top-down (from brain to senses): Your brain already has expectations about what you're about to see. Those expectations travel back down.

You're reading this sentence right now. The lower part of your visual system is identifying individual letter shapes: "Y", "o", "u". But the higher part of your brain is already predicting what word comes next based on context. It's sending that prediction back down to the lower areas, essentially saying "expect to see these letter patterns."

When your prediction matches what you actually see, reading feels effortless and neat.

But when there's a mismatch (say, the sentence includes an unexpected word), we reach an interesting situation: your attention is raised. You pause. Maybe you even feel provoked. And that mismatch is a signal to your brain that your visual cortex has detected something extraordinary. So your little brain cells start doing what they are supposed to: learning. In a nutshell, learning happens when you are exposed to unfamiliar settings, the ones your brain can’t predict.
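Here is a minimal Python sketch of that idea, assuming a toy reader that only remembers which word tends to follow which. The class `SequenceMemory` and its methods are my own illustrative names, not anything from the book, and a real cortex is nothing this simple. The sketch updates its memory only when a prediction fails, so a familiar sentence passes silently and an unexpected word is the one thing it learns from.

```python
from collections import Counter, defaultdict


class SequenceMemory:
    """Toy word-level memory (hypothetical): predicts the next word from the current one."""

    def __init__(self):
        # successors[word] counts the words that surprised us after `word`
        self.successors = defaultdict(Counter)

    def predict(self, word):
        """Return the strongest stored successor of `word`, or None if nothing is stored."""
        followers = self.successors.get(word)
        return followers.most_common(1)[0][0] if followers else None

    def read(self, sentence):
        """Read a sentence; learn only from mispredicted words and return them."""
        words = sentence.lower().split()
        surprises = []
        for current, actual in zip(words, words[1:]):
            if self.predict(current) != actual:
                surprises.append(actual)               # prediction failed: attention
                self.successors[current][actual] += 1  # ...and learning
        return surprises


memory = SequenceMemory()
print(memory.read("the cat sat on a mat"))  # first exposure: every word after "the" is a surprise
print(memory.read("the cat sat on a mat"))  # familiar now: []
print(memory.read("the cat sat on a dog"))  # only the unexpected word: ['dog']
```

Updating only on a mismatch is a deliberate simplification; it is just the smallest way I could think of to show "surprise drives learning" in code.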

This enables invariant representations—recognizing patterns despite changes in details. You recognize a friend's face from any angle, under different lighting, partially obscured. You identify a song played at different tempos or on different instruments. Lower levels handle the variable details. Higher levels extract what stays constant. The brain doesn't store every possible variation—it stores temporal sequences of how patterns typically unfold.

Memorizing every single version of something would be inefficient. So the brain learns the pattern of how things usually happen.

For example, you can recognize your cat even if it is half covered in fabric. Or you identify road signs when driving despite partial visual obstacles (say, the paint is fading). You didn't memorize the entire collection of road signs in every possible condition. You learned the underlying pattern of what makes that road sign that exact road sign.

Same with music. You can recognize "Binary Sunset" from Star Wars whether it's played on a piano or hummed by someone. Fast or slow. Different key. Doesn't matter. You stored the sequence of how the melody moves, not every specific version of it.

The bottom layers of your brain handle the details that change—the specific lighting, the exact instrument, the particular angle. The top layers extract what doesn't change—the fundamental structure, the core pattern.

So when something varies slightly, your brain doesn't get confused. It matches the new input against the stored pattern. The details are different, but the sequence is the same. That's why you can still recognize it.
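To make the melody example concrete, here is a small Python sketch, assuming a melody is just a list of (MIDI pitch, duration) pairs. The function `signature` and the tunes below are made up for illustration. It keeps only the steps between notes and the relative note lengths, which is one simple way to get a representation that ignores key and tempo; it says nothing about instrument, and it is not a claim about how the cortex actually encodes music.

```python
from fractions import Fraction


def signature(melody):
    """Reduce a melody to what stays constant across keys and tempos.

    `melody` is a list of (midi_pitch, duration) pairs. The result keeps only
    the pitch steps between neighbouring notes and each duration relative to
    the first note, so absolute key and absolute speed drop out.
    """
    pitches = [pitch for pitch, _ in melody]
    durations = [Fraction(duration) for _, duration in melody]
    steps = tuple(b - a for a, b in zip(pitches, pitches[1:]))
    rhythm = tuple(d / durations[0] for d in durations)
    return steps, rhythm


# A made-up four-note tune, not a transcription of any real piece.
tune = [(60, 1), (62, 1), (64, 2), (60, 4)]
higher_and_faster = [(65, 0.5), (67, 0.5), (69, 1), (65, 2)]  # up 5 semitones, double speed
other_tune = [(60, 1), (64, 1), (67, 2), (72, 4)]

print(signature(tune) == signature(higher_and_faster))  # True
print(signature(tune) == signature(other_tune))         # False
```

The details (which key, how fast) are thrown away at the bottom, and what is left is the sequence of how the melody moves.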

Prediction as the Core

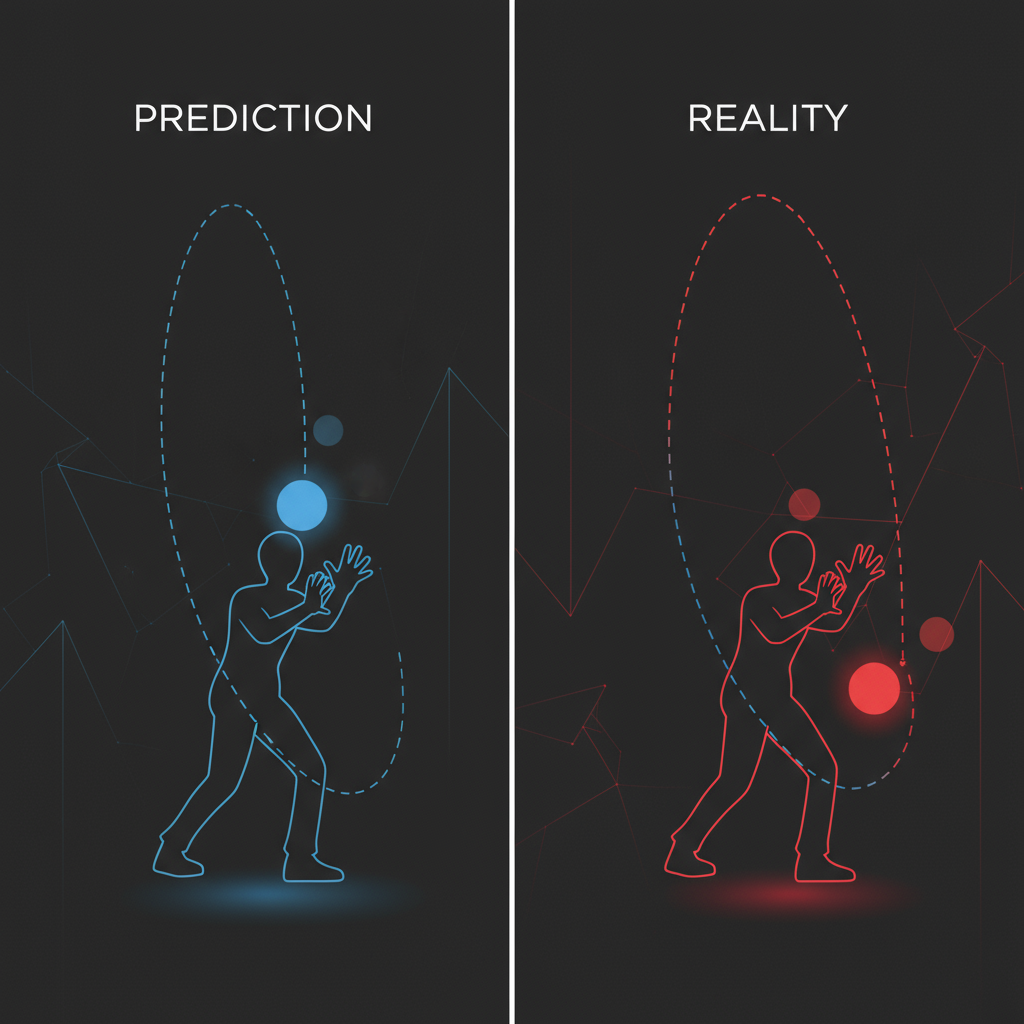

Hawkins further diverges from traditional views when he concludes that intelligence isn't reactive; it is proactive prediction.

Your brain predicts constantly. And it is not limited to major events. It runs minor predictions too. The next word you'll read. How your coffee cup will feel when lifted. What you'll see when you turn your head. And even the tone of that aggressive teacher when he opens his mouth to speak.

These predictions happen automatically, at every level. When predictions are confirmed, information flows smoothly: you experience fluency, understanding, and possibly expertise. When predictions break, you feel surprise, confusion, or that jarring sensation when a great joke catches you off guard.

This is auto-associative recall. Hear "Jingle bells, jingle bells" and your brain completes "jingle all the way" before you hear it. Your brain basically predicts the rest of the song after hearing the first few words alone. And this is not optional. It is built into the system. That's how it operates.
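A rough Python sketch of recall-from-a-fragment, assuming memory is nothing more than a list of stored word sequences. `LyricMemory` is a hypothetical name, and plain list matching here is only a stand-in for whatever neurons do; the point is just that a partial cue is enough to pull out the rest.

```python
class LyricMemory:
    """Toy sequence store (hypothetical): give it a fragment, get back what follows."""

    def __init__(self):
        self.sequences = []

    def store(self, text):
        """Remember a line as a sequence of lowercase words."""
        self.sequences.append(text.lower().split())

    def complete(self, cue):
        """Return the words following `cue` in the first stored sequence containing it."""
        cue_words = cue.lower().split()
        n = len(cue_words)
        for seq in self.sequences:
            for start in range(len(seq) - n + 1):
                if seq[start:start + n] == cue_words:
                    return " ".join(seq[start + n:])
        return None  # nothing stored matches the cue


memory = LyricMemory()
memory.store("jingle bells jingle bells jingle all the way")

print(memory.complete("jingle bells jingle bells"))  # jingle all the way
print(memory.complete("all the"))                    # way
print(memory.complete("silent night"))               # None
```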

Traditional pattern matching merely identifies what something is. Prediction tells what something will become, how it will behave or act. The difference is critical. The former is similar to recognizing a chess position; the latter, understanding the game. The former is matching "C-A-T" to a stored image, and the latter, knowing that in English text, "the c_t" will almost certainly complete as "cat."

Intelligence is fundamentally about building increasingly accurate internal models of how the world works. A self-learning model. Behavior emerges from these models as a consequence, not as the goal. When you speak a word in a foreign language, your brain's predictive model generates the commands for saying it while already predicting what the next word should be. The intelligence is in the model, not the behavior itself.

A little footnote: I had watched this video by Kurzgesagt. I remembered it talked about a similar concept, but in a different light.

Why Traditional AI Missed the Mark

Expert systems encoded human knowledge as rules. IF this THEN that. They could diagnose diseases when conditions matched their rules perfectly. But they had no understanding, no internal model, no ability to predict novel situations. They were lookup tables dressed up as intelligence. They failed because intelligence isn't about memorizing responses—it's about building models that generalize. Rote learning is the lack of learning.
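The "lookup table" point is easy to see in a few lines of Python. This is a sketch under my own assumptions: the rules and symptom names are invented for illustration and are not taken from any real expert system (or from medicine).

```python
# Hypothetical IF-THEN rules: (required conditions, conclusion)
RULES = [
    ({"fever", "cough"}, "flu"),
    ({"sneezing", "itchy eyes"}, "allergy"),
]


def diagnose(symptoms):
    """Fire the first rule whose conditions are all present; otherwise give up."""
    observed = set(symptoms)
    for conditions, conclusion in RULES:
        if conditions <= observed:  # every condition of the rule is satisfied
            return conclusion
    return None  # no rule matches: the system has nothing to say


print(diagnose(["fever", "cough"]))        # flu
print(diagnose(["fever", "sore throat"]))  # None
```

The second call is the brittle part: the input is only slightly different, but there is no model underneath to fall back on, so the system cannot even guess.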

Early feedforward neural networks improved by learning patterns from data rather than hand-coded rules. But they were static: input → hidden layers → output. They had a limited hierarchy and no temporal processing in their architecture. Recurrent networks like LSTMs could handle sequences, but they learned statistical correlations in temporal data without building the hierarchical, bidirectional, predictive memory structure Hawkins describes.

Traditional AI treated intelligence as a math problem. Put data in, get the answer out. Even systems that handled sequences (say, predicting the next word) processed information in one direction. The neocortex doesn't work that way. It constantly sends predictions downward while sending sensory data upward, at multiple levels simultaneously.

Real intelligence processes incoming information while feedback flows continuously. Words form sentences while upper levels predict what comes next and shape how lower levels interpret the incoming data. Simple patterns turn into complex ones across multiple hierarchical levels simultaneously.

This is why classic AI systems seemed brittle. They worked in narrow domains but shattered when conditions changed slightly. They didn’t have the integrated temporal-hierarchical-predictive architecture (or whatever that is) that makes biological intelligence simply better.

What This Means

Hawkins reframed the question. Intelligence is not about how well you perform a task. It is about the internal model you use to attack that task: a model that predicts properly within context, builds hierarchical representations, and learns temporal sequences. That's closer to genuine understanding than a system that merely computes correct answers through brute force.

This matters for evaluating modern AI. GPT-4, Claude, and similar models generate criminally human-like text. They can solve complex problems. But do they understand?

They predict the next token based on statistical patterns in their training data, which resembles Hawkins' prediction framework. But they lack the hierarchical temporal memory structure he describes. They don't build explicit internal models. And they learn from a fixed corpus of text, later tuned with human feedback, not from continuous experience of the world.

The debate over whether LLMs have genuine understanding versus sophisticated pattern matching is exactly what Hawkins was talking about. That’s the difference between behavior and intelligence.

On Learning

If intelligence is prediction, then learning is the continuous refinement of predictive models through exposure to sequences.

Expertise isn't memorizing facts. It's having rich, detailed predictions in a domain. A chess master doesn't calculate more moves—they recognize patterns and predict promising continuations instantly. A musician doesn't decode sheet music note by note—they predict melodic and harmonic sequences. Mastery equals accurate, automatic prediction.

This changes how I think about confusion and insight. Confusion signals prediction failure. Your model doesn't fit the data. That jarring feeling when information contradicts expectations—that's the learning signal. Insight is when you update your model and predictions suddenly align with reality. The "aha" moment isn't mystical. It's a successful model revision.

Limitations

The neocortex isn't as uniform as Hawkins suggests. There's more regional specialization than the book admits, most visibly for vision: the visual cortex has unique structures for handling space and depth, and language processing happens mostly on the left side of the brain. Hawkins also focuses almost entirely on the neocortex while ignoring other brain regions. The cerebellum handles movement timing. The basal ganglia select which actions to take. The hippocampus stores memories of specific events. The thalamus directs attention. And intelligence needs this entire setup to be working all the time, not just the neocortex alone.

Twenty years later, Hierarchical Temporal Memory hasn't changed AI the way Hawkins predicted. Meanwhile, modern AI systems (including GPT-4, Claude, DALL-E) achieve impressive results using completely different architectures. They don't build the kind of memory-prediction structures Hawkins describes. Yet they predict text, generate images, and show capabilities that look like reasoning.

Two possible conclusions: either Hawkins' framework isn't necessary for intelligence, or these systems are just very advanced mimics without real understanding (and it also depends on how you define understanding/intelligence). Hawkins would likely agree with the latter.

None of this means his core ideas are wrong. Prediction, hierarchy, processing sequences over time, and information flowing in both directions are all crucial to understanding intelligence, and much of this (predictive processing in particular) is taken seriously in neuroscience. That his specific implementation didn't dominate AI doesn't mean the principles are wrong. It might mean there are multiple paths to intelligence, not only one.

Conclusion

Behavior can be faked. Understanding requires models/architectures that predict and transfer to new situations.

The debate continues. Do modern AI systems actually understand, or just match patterns at a massive scale? Can we reach human-level AI by scaling current systems, or do we need the principles Hawkins describes, the ones brains actually use?

We're building incredibly capable systems without knowing if they're intelligent the way brains are.

Hawkins didn't solve intelligence. But he asked the right questions and pointed toward principles any theory of intelligence must address.

So are we building intelligence or simulating it? Does that distinction even matter when the imitation is flawless?

Thank you for reading this post!